Update: There is now a recording of the meetup on YouTube.

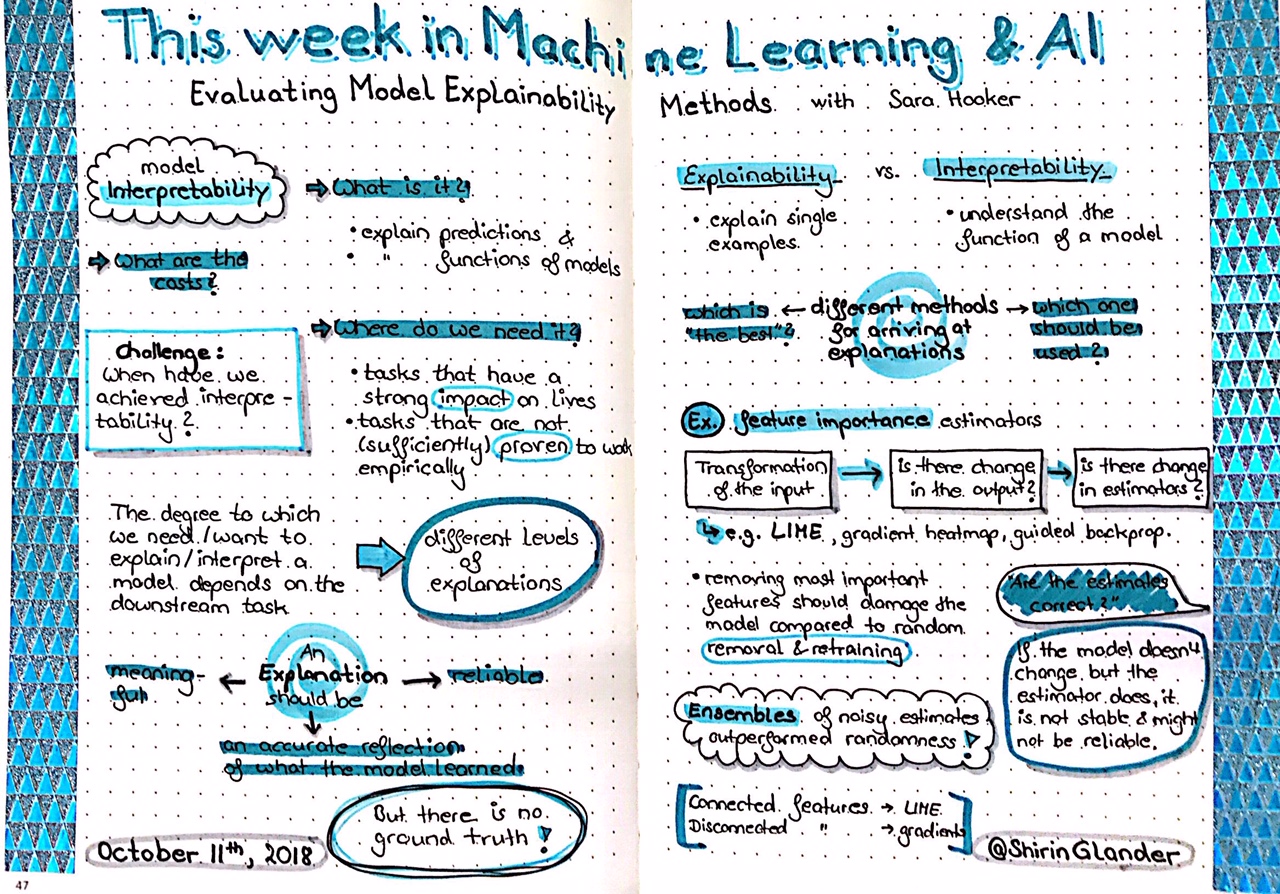

Here you can find my slides from the TWiML & AI EMEA Meetup about Trust in ML models, where I presented the Anchors paper by Carlos Guestrin et al.

I have also just written two articles for the German IT magazine iX on the same topic, Explaining Black-Box Machine Learning Models:

A short article in iX 12/2018