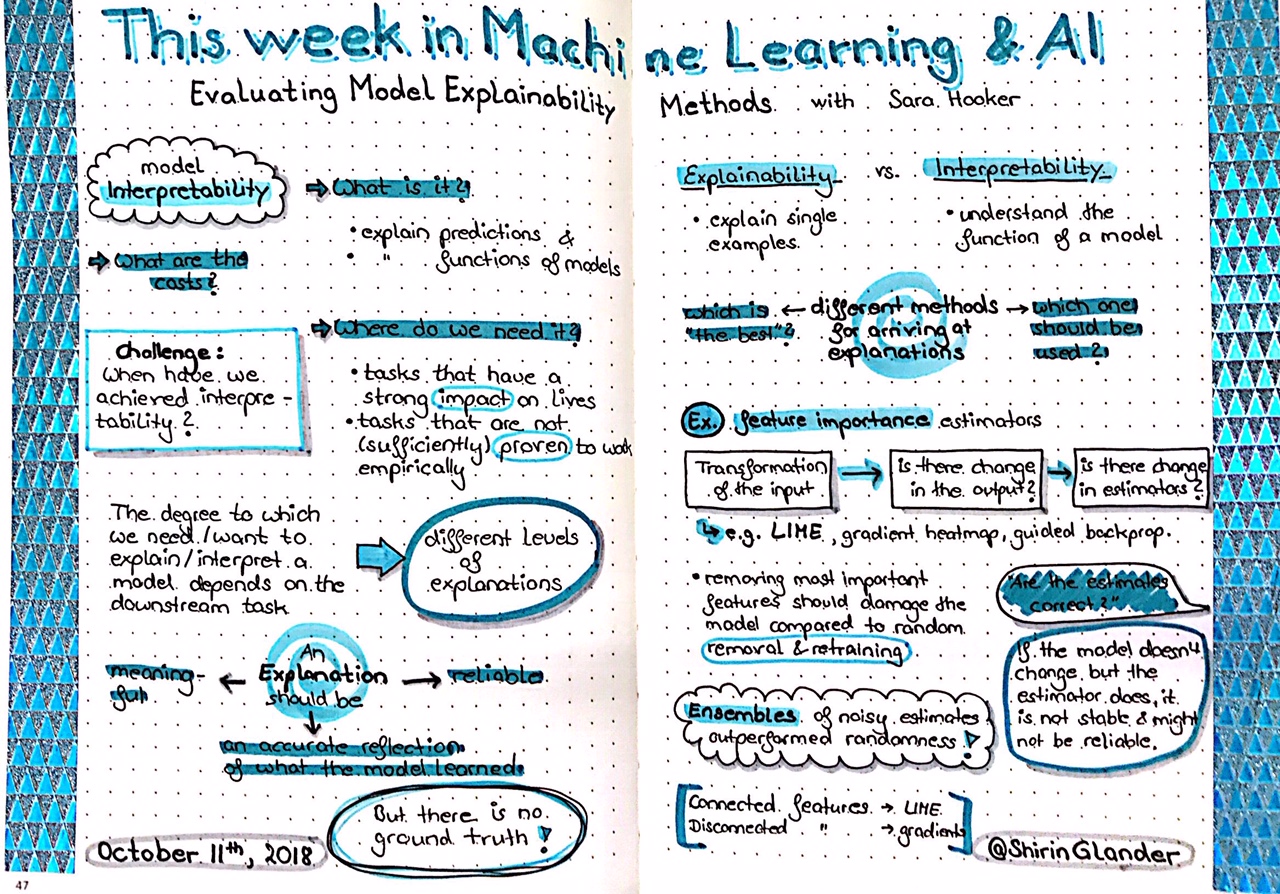

At the upcoming This Week in Machine Learning and AI European online meetup, I’ll be presenting the Anchors paper, which introduces the next generation of machine learning interpretability tools, and leading a discussion about it. Come and join the fun! :-)

Date: Tuesday, 4 December 2018

Time: 19:00 CET

Join: https://twimlai.com/meetups/trust-in-predictions-of-ml-models/