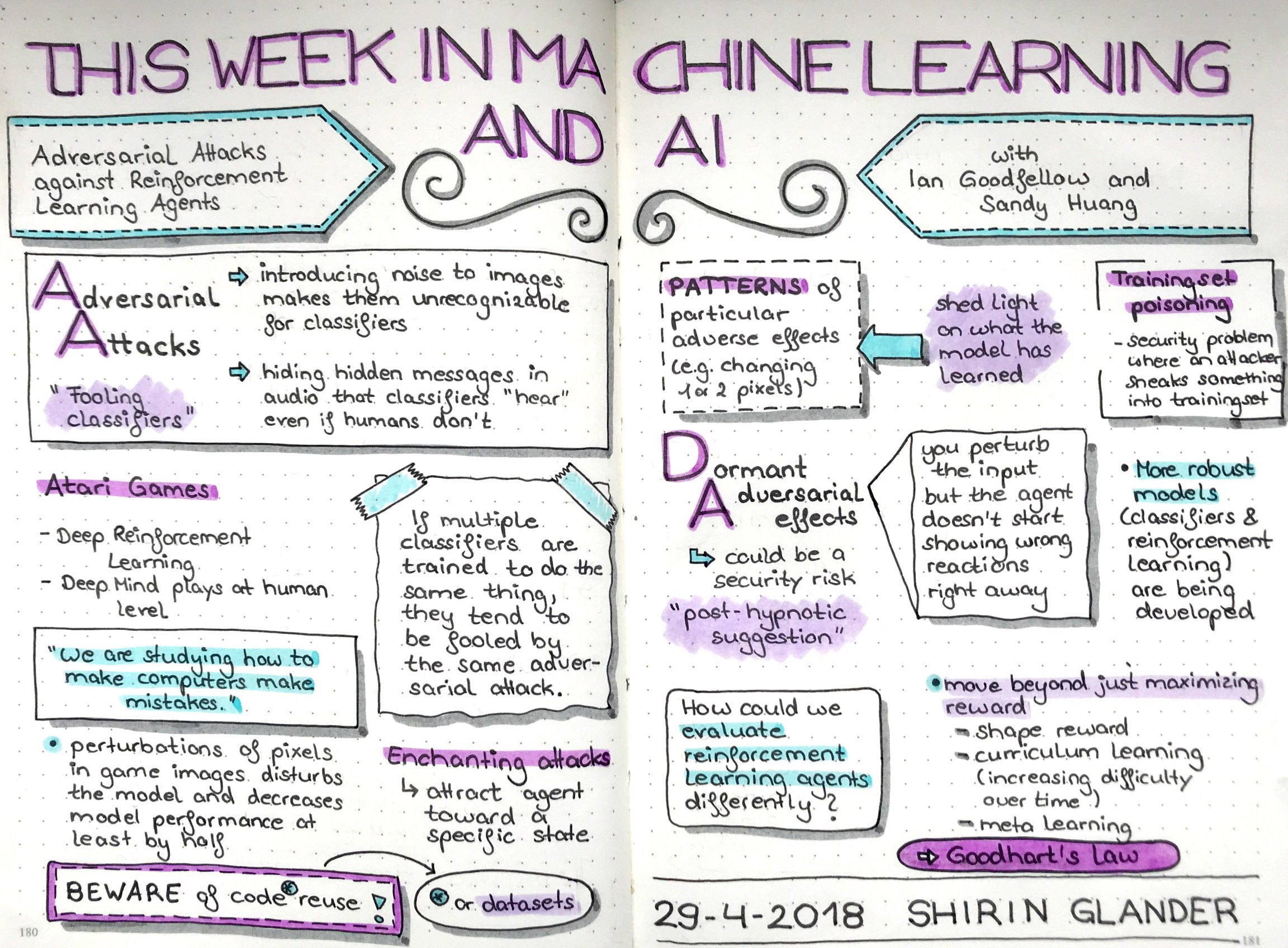

These are my sketchnotes for the episode of Sam Charrington’s podcast This Week in Machine Learning and AI on Adversarial Attacks Against Reinforcement Learning Agents, with Ian Goodfellow & Sandy Huang:

Sketchnotes from TWiMLAI talk: Adversarial Attacks Against Reinforcement Learning Agents with Ian Goodfellow & Sandy Huang

You can listen to the podcast here.

In this episode, I’m joined by Ian Goodfellow, Staff Research Scientist at Google Brain, and Sandy Huang, PhD student in the EECS department at UC Berkeley, to discuss their work on the paper Adversarial Attacks on Neural Network Policies.