Today I am very happy to announce that during my stay in London for the m3 conference, I’ll also be giving a talk at the R-Ladies London Meetup on Tuesday, October 16th, about one of my favorite topics: Interpretable Deep Learning with R, Keras and LIME.

You can register via Eventbrite: https://www.eventbrite.co.uk/e/interpretable-deep-learning-with-r-lime-and-keras-tickets-50118369392

ABOUT THE TALK

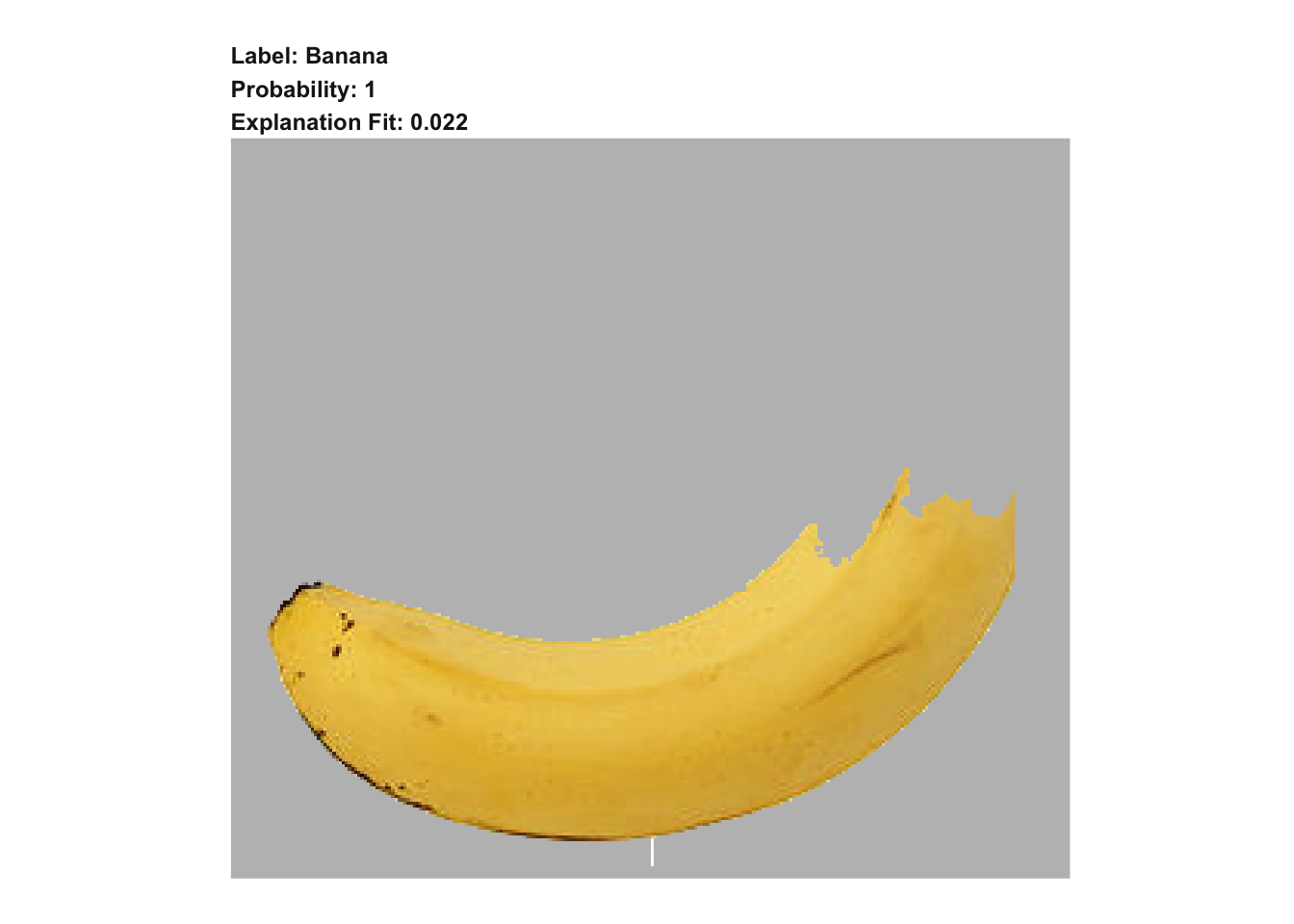

Keras is a high-level, open-source deep learning framework that runs on top of TensorFlow by default.